After 11 years doing Quality Assurance and Test, in both the public and private sectors, I have learned that testing is overrated, testers are lazy, talking is underrated and waterfalls, while pretty, are not always fun to negotiate. These are all positive things.

Underpinning all of this is a simple mantra that I trot out to every team I work with: if we all talk to each other openly, we’re far more likely to succeed. This post aims to illustrate why communication and common sense are much better than robotically following processes.

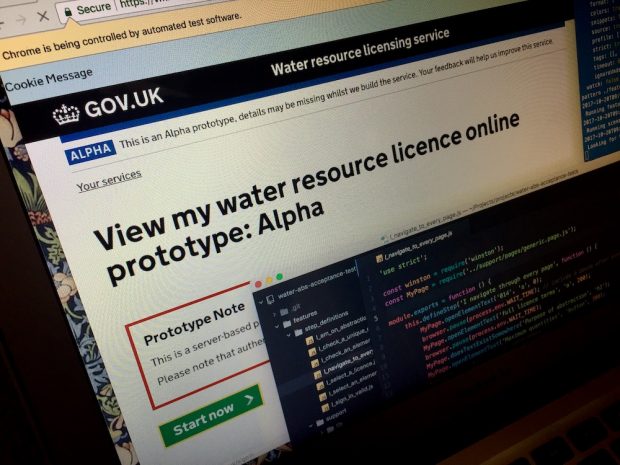

Our team is currently working on a service to track water abstraction licences, for people who need to take large amounts of water out of the environment, say, for drinking or farming. (Currently this is done using paper forms like these.) My role is ensuring that what we design and build meets user needs. We can’t do this without talking to our users from the start, and to each other. You’d be amazed how often this isn’t done properly.

"Quality Assurance" versus "Test"

I use the terms “QA” and “test” interchangeably, but there is a key difference. A tester traditionally finds defects in response to something that’s been developed elsewhere. A QA person uses communication to anticipate defects before they happen. For example, we challenge assumptions up front by asking questions such as: “How will we include users with access needs?”, “What happens if you enter the wrong thing in this field?”, or “Should we even be doing this at all?” These seemingly innocent questions can stop a team heading off in the wrong direction.

Different kinds of scripts

Quality assurance people make use of things called “scripts” to get tests done. There are 2 kinds of script: manual and automated.

A manual script is a set of tasks, written down in a particular order. Like the script in a play that tells the actors what to say on stage, it tells people what steps they should take in order to check that a particular piece of functionality is working. Manual scripts are especially helpful where the software is intricate or safety-critical.

Automated scripts are lines of code (in our case, JavaScript) which tell a browser to do simple things, like “type a password in the password box” or “check that there’s a heading with the word ‘abstraction’ in it”. By programming these steps, we can run them whenever we like in a matter of seconds, to check that we haven’t just broken something with our last code update. A bit of effort up front is common sense, to save repetition later on.

This is useful if we want to do a regular check that, say, all of the links work while checking 12 browsers simultaneously. Doing this manually would be very, very dull.
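To make that a little more concrete, here is a minimal sketch of two such steps in JavaScript. It is an illustration rather than our real test code: `createFakePage`, `runChecks`, the `#password` selector and the heading text are all made up for this example, and the fake page stands in for the real browser that a tool like WebdriverIO would drive.

```javascript
// Hypothetical stand-in for a real browser session, so the sketch runs on its own.
// In a real test, a browser automation tool would provide this behaviour.
function createFakePage() {
  const fields = { "#password": "" };
  const headings = ["Manage your water abstraction licence"]; // made-up heading text
  return {
    // Put text into the field matching the selector.
    type(selector, text) {
      if (!(selector in fields)) throw new Error(`No field matches ${selector}`);
      fields[selector] = text;
    },
    // Read back the current contents of a field.
    read(selector) {
      return fields[selector];
    },
    // Return a copy of every heading on the page.
    headings() {
      return headings.slice();
    },
  };
}

// Run the two checks described in the text and collect a pass/fail result for each.
function runChecks(page) {
  const results = [];

  // Step 1: type a password in the password box.
  page.type("#password", "example-password");
  results.push({
    step: "password typed into the password box",
    passed: page.read("#password") === "example-password",
  });

  // Step 2: check that there's a heading with the word "abstraction" in it.
  const found = page
    .headings()
    .some((heading) => heading.toLowerCase().includes("abstraction"));
  results.push({ step: "heading mentions abstraction", passed: found });

  return results;
}

const results = runChecks(createFakePage());
for (const result of results) {
  console.log(`${result.passed ? "PASS" : "FAIL"}: ${result.step}`);
}
```

Because the steps are just code, the same checks can be rerun after every update with no extra effort.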

Testing is overrated - why script, when you can explore?

The traditional way of testing a service’s functionality is to write a series of manual scripts. They might look something like: “Step 58. Description: click button. Expected result: button is clicked”.

By the time you’ve written this in a testing tool and marked it complete, you could have clicked the button with a mouse, repeated it with a keyboard (to check it’s accessible without a mouse), and clicked it again having deleted some field information to generate an error.

If you feel you need to check a part of it again because ‘it smells a bit funny’, you can make a brief note of it and chat to the developer to see if there’s an underlying issue.

You triple your chances of finding a defect by exploring, relying on feelings and talking, compared with blindly scripting in isolation. It’s also far more interesting.

Testers are lazy - robots can do the dull bits

When we do test with automated scripts, we try to let the software do the hard work for us, ‘borrowing’ useful code from other projects.

I recently scripted my first half-decent automated tests (using a mixture of Cucumber, WebdriverIO, Selenium, BrowserStack and Node.js). Getting my head around JavaScript was the biggest learning curve. Given some steps A, B and C, a polite language (such as Ruby) would dutifully run A, then B, then C. JavaScript, however, sniffs disdainfully at A, does C if it feels like it, and doesn’t even look at B until some arbitrary thing happens. Additionally, the documentation is rarely helpful to someone as un-technical as me.

Luckily we have some nice developers nearby happy to explain things like asynchronous code, callbacks and closures. Testers and developers talking and helping each other out, rather than trying to outwit each other and score points, keeps us all happy. That’s what I mean by communication and common sense.
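For anyone else puzzled by the ordering, this small sketch shows the behaviour described above. It assumes a hypothetical `step` helper that pretends to do some slow work with a timer; the names and delays are invented for illustration. Started without waiting, the steps finish in whatever order their timers allow. With `await`, they run A, then B, then C.

```javascript
// Hypothetical step: pretends to do slow work, then records its name in the log.
function step(name, delayMs, log) {
  return new Promise((resolve) => {
    setTimeout(() => {
      log.push(name);
      resolve();
    }, delayMs);
  });
}

// Without waiting between steps: all three start at once,
// so they finish in order of their delays, not in the order written.
async function runWithoutWaiting() {
  const log = [];
  const pending = [step("A", 30, log), step("B", 60, log), step("C", 10, log)];
  await Promise.all(pending); // wait only so the finished log can be inspected
  return log;
}

// With await: each step must finish before the next one starts.
async function runInOrder() {
  const log = [];
  await step("A", 30, log);
  await step("B", 60, log);
  await step("C", 10, log);
  return log;
}

runWithoutWaiting().then((log) => {
  console.log("without waiting:", log.join(", ")); // without waiting: C, A, B
});
runInOrder().then((log) => {
  console.log("with await:", log.join(", ")); // with await: A, B, C
});
```

The only difference between the two functions is where the `await` sits, which is roughly what our developers had to explain to me more than once.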

Talking is underrated - communication leads to quality

I’ve seen enough projects fail due to communication barriers and, ironically, the fear of failure. We need to work in the open. That includes our code, our user stories and bugs, our mistakes, our challenges and our successes.

Sending overly sanitised email reports to management is poor communication and a huge, unnecessary overhead. We should always “show the thing” we’re building instead, asking for honest feedback to help us learn and improve.

Agile means talking

The shift to agile working in government has been eye-opening for me. The traditional way of working, waterfall, relies heavily on plans and processes that can rarely be followed. As my colleague David Thomas pointed out in his post here a few weeks ago, service development is complicated and unpredictable:

Predictions can be made at a high level, but typically plans are out of date within weeks.

Advance planning rarely accounts for user needs, and they don’t get identified until research work has begun.

Agile means adapting and delivering in small increments to meet changing user needs. We can think for ourselves, be creative and have a sense of humour. Above all, we are given the space to communicate what’s on our minds. Waterfall to agile is like moving:

- from carefully scripting tests in another room as if they were concocted from a mystical range of powders, unguents and salts, to having a play with the service and talking to the developer next to you, as they write code to pass the team’s scenarios

- from saying “No, this hasn’t met the acceptance criteria”, to saying “Yes, we can deliver these bits and by the way, we don’t need these bits any more”

- from not considering usability and inclusion, to considering usability and inclusion. This means that we can properly communicate with everyone who needs to use our service, and design the interaction so that it’s more enjoyable

I don’t have a background in computer science, so I’ve had to learn this the hard way with a lot of help from my patient colleagues. But we’re lucky to have the space to talk to each other and learn from each other.

QA, with the help of an open and communicative team, gives us confidence that we’re building the right thing, and building the thing right for our users. Good communication ensures quality. Turns out we were all on the same side all along.

2 comments

Comment by Martin posted on

As always Andrew, well done on a thought inspiring piece. However, I wholeheartedly disagree and think the Blog is short-sighted.

Manual Testing is an indispensable part of ensuring high quality software is delivered. Automation Tests can (and do!) help you cut down on release cycle time. But one thing that cannot be automated is usability & human behaviour. I think we live in a ‘Testing World’ where there is an added pressure on Govt Agencies to seek automation alternatives to release quicker and cheaper. We cannot forget that Manual Testing is the main input to Automation scripts! I see it becoming all a little like ‘Rise of the Machines’. Manual tests are best kept ‘manual’ because (and all together now) ‘You cannot automate everything!’

IMO there has been too much focused attention on automated testing over the last several years and on the importance of automating more. While this is certainly an extremely important element to ensure software quality, automating for the sake of automation can cause significant issues. There are situations where creating automated test scripts does not make sense (as I referred to on some of my recent project calls and the level of testing required for Mobile Services). As a supporter of both approaches within any organisation, you need to make sure you have strong manual and automated testing resources.

Great manual testing is hard to find. It’s taken me years of experience to become a great (some would say not so great) manual tester. Manual testers can find significant defects that developers or automated test scripts will not find, so a great tester is worth every penny and more of what they are paid. I personally have found significant defects which would have been catastrophic to the Govt if they were not identified.

It should also be noted that:

- Our strategists perform extensive analysis to determine which approach is best, manual testing or automated

- If the test will be performed only a few times, manual testing can be a much more efficient option

- In software testing not everything should be automated

- Manual testers will always be an important element of any software testing team

I feel it’s unfortunate that some people believe that manual testing is obsolete. There needs to be a strong balance between having manual and automated testers in order to have a top Quality Testing function.

Comment by Andrew Hick posted on

Many thanks Martin for your comments. Although the title is intentionally provocative, manual testing will always be needed. The point I want to make is that in my experience, much of it as a manual tester, early conversations and exploratory testing are often more effective at preventing defects than formal, scripted manual testing.

Manual testing (exploratory or scripted) is a great and often unrecognised skill to have, requiring a particular, questioning mindset. However, I've worked in places where tests are scripted without the ability to question whether the functionality should have been developed that way in the first place.

I too have been sceptical of automation, and agree we shouldn't blindly automate everything - only the boring bits. For example, a Cucumber script won't detect poor wording or the wrong font. The most effective testing may be a blend of manual and automated, but there's a third dimension: defect prevention through communication, rather than detection. As always, happy to discuss!